On May 30, 2024, U.S.-based A.I. company OpenAI released a threat report titled “Disrupting Deceptive Uses of AI by Covert Influence Operations.” The report detailed how actors linked to three countries (China, Iran, and Russia) and one Israeli company, STOIC, used OpenAI’s A.I. models to conduct influence operations (IO) against various audiences. OpenAI also described the attacker and defender trends it observed during its investigations.

Countries and Business Involved in the Influence Operations

China: Spamouflage

China conducted an operation called Spamouflage that posted A.I.-generated content on social media platforms and blogs to criticize Chinese dissidents and spread disinformation. The FBI has attributed some of the network’s social media activity to China’s Ministry of Public Security (MPS). The network mainly created content in Chinese and, to a lesser extent, in English, Japanese, and Korean. The threat actor used OpenAI’s models for several purposes, such as debugging code, seeking advice on social media analysis, researching current events and news, and generating content for publication on blogs and social media. For example, the actor created articles alleging that Japan’s release of wastewater from the Fukushima nuclear power plant had damaged the region’s marine environment, and posted them on blogging platforms such as ameblo.jp, Blogspot, and Medium.

The investigators also gave an example of how the threat actor used A.I. models to post fake comments criticizing dissidents. In April 2024, the group posted several English comments that criticized Cai Xia, a Chinese dissident. The investigators identified one bot account that posted the initial comment and a cluster of other bot accounts that then replied to it. However, no real accounts replied to the bots’ comments.

The threat group also used OpenAI’s models to “conduct open-source research” for various purposes, ranging from summarizing social media posts by “critics of the Chinese government” to seeking advice on applying for developer accounts on social media platforms. However, the investigators pointed out that A.I.-generated content made up only a minority of the group’s overall output. For example, the network’s Medium and X accounts carried a higher volume of manually written disinformation in Chinese and English than A.I.-generated content, and this manually created content contained “unidiomatic use of English.”

Most of the group’s Chinese-language content ranged from praising the Chinese government to criticizing U.S. actions. The group also created content in foreign languages, such as English, Japanese, Korean, and Russian, to target dissidents and critics and to spread disinformation. One example is the group’s creation of “positive comments in Chinese” about the Minister of Public Security’s visit to Uzbekistan. Another notable example is the group’s use of the models for what appeared to be a test assignment for Chinese Communist Party (CCP) members.

The report described Spamouflage as “the world’s most intensively researched IO since it was first publicly described in 2019.” However, little of its activity “has ever been reported as reaching authentic audiences,” and “the use of A.I.-generated content does not appear to have changed that dynamic.” For example, none of the high engagement and follower counts on the group’s articles, blogs, and social media accounts came from real people. The investigators rated the operation as Category Two on the Breakout Scale because, although it posted across multiple platforms, none of its disinformation was significantly amplified by people outside the network.

Iran: International Union of Virtual Media

OpenAI banned a small number of accounts associated with the International Union of Virtual Media (IUVM), an Iranian group that open-source researchers have studied since 2018. IUVM’s campaign targeted audiences worldwide with content in English and French. The group used OpenAI’s models to generate and proofread articles, headlines, and website tags that it published on its current website. It typically generated articles a day before publishing them but generated the tags immediately before publication. Several tags included the text of an OpenAI model’s response message, which the investigators said suggests that the group either automated tag creation or did not proofread the tags.

IUVM’s content was anti-U.S. and anti-Israel while praising Iran, the Palestinians, and the “Axis of Resistance.” The group’s reach has been reduced by the FBI’s seizure of its domains and by repeated social media takedowns. However, IUVM still maintains a current website as well as associated accounts on TikTok, VKontakte, and Odnoklassniki, which had only 10, 76, and 274 followers, respectively. The investigators rated IUVM’s operation as Category Two on the Breakout Scale.

Russia: Bad Grammar and Doppelganger

The report listed two IOs that Russia conducted using OpenAI’s models: Bad Grammar and Doppelganger. Investigators named the first IO “Bad Grammar” due to the group’s “repeated posting of ungrammatical English and its struggle to build an audience.” OpenAI banned the accounts attributed to this IO, which used the models to generate comments for Telegram. The operation targeted audiences in the Baltic States, Moldova, Russia, Ukraine, and the United States with content in English and Russian. The group used OpenAI’s models to build a comment-spamming pipeline on Telegram, first using the models to debug code designed to automate posting on the app and then generating messages in English and Russian to reply to specific Telegram posts using at least 12 accounts.

The group used OpenAI’s models to create English comments in the voices of fake personas representing different demographics on both sides of the U.S. political spectrum. These English comments focused on economic hardship, immigration, and other topics to argue that the United States should not support Ukraine. By contrast, the group generated its Russian-language content using “more thematic, less persona-based instructions.” The group posted on Telegram channels such as the pro-Russian Slavyangrad and the conservative SGTNewsNetwork, using the models to create short comments on a “handful of political themes.” The main topics in both English and Russian were the Ukrainian conflict, the “political situation in Moldova and the Baltic States, and politics in the United States.” For example, some of the Russian comments accused the presidents of Moldova and Ukraine of corruption and of betraying their own people to Western interference.

The group also used multiple personas to comment on the same post with conflicting points of view. This two-faced approach is a common tactic in Russian IOs and is used to deepen divisions among audiences. Notably, although Bad Grammar used a “novel technique” to create comments, it struggled to attract engagement: few of its comments received any likes or replies, and they usually made up a minority of the replies to any one post. The group also used OpenAI’s models to draft private messages to another Telegram user, and the text suggested that this user was a cryptocurrency scammer. The investigators rated Bad Grammar as Category One on the Breakout Scale because the group posted content on a single platform and did not achieve significant amplification.

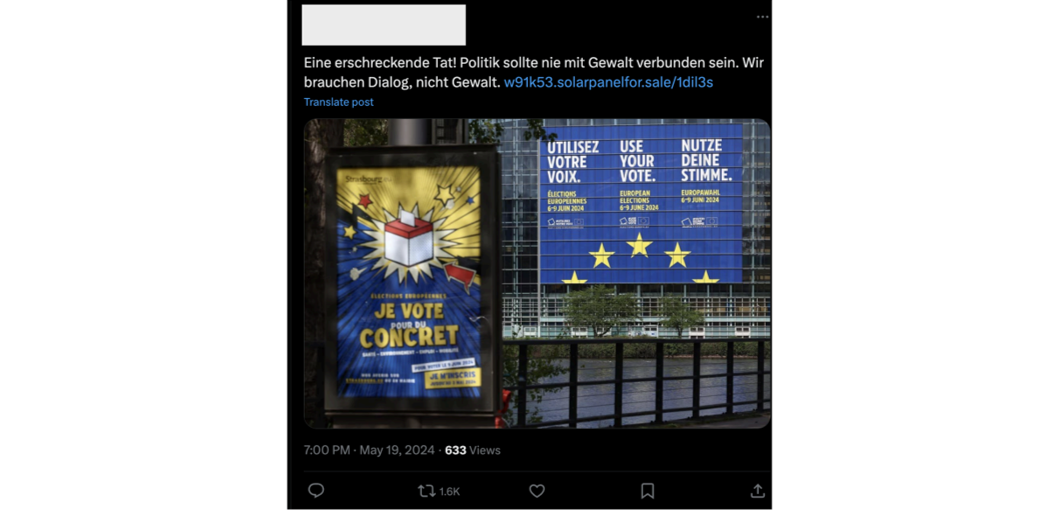

OpenAI also banned “four clusters of accounts” that used its models for the Doppelganger operation. Each cluster used different tactics, techniques, and procedures (TTPs) because each was made up of a different team. The operation targeted audiences in Europe and North America and created content for social media and websites. The first cluster generated “short text comments” in English, French, German, Italian, and Polish and posted them on 9GAG and X alongside links, memes, and videos that were not created with OpenAI’s models.

This cluster also posted comments on X from accounts that had previously made only one or two posts each, attaching a random link to each comment. The links redirected users to websites “attributed to Doppelganger.” However, the websites were geofenced, so only visitors with an IP address in the targeted country could view them. The operators may have configured the geofencing incorrectly, since some English, German, and Polish articles could only be viewed from a French IP address. The cluster also accessed OpenAI’s models through a service linked to Czech and Russian firms to evade detection and circumvent OpenAI’s policy limiting access to supported countries.

The second cluster translated Russian articles into English and French, published the translations on a website attributed to Doppelganger, and created short social media comments about the articles. The third cluster used the models to create, “proofread, and correct French-language articles” and then published them on a website associated with Doppelganger. The fourth cluster worked in German, taking content from a Doppelganger website and using it to create short posts for a Facebook Group and Page named after the website.

Most of the group’s content focused on the Ukrainian conflict and “portrayed Ukraine, the US, NATO, and the EU in a negative light and Russia in a positive light.” The group also tried to use OpenAI’s models to create cartoon images of European politicians and critics of Russia, but the models refused these requests. The four clusters that made up the Doppelganger operation did not receive significant positive engagement from “authentic audiences,” and wide discrepancies in the engagement their posts did receive point to inauthentic amplification. The investigators rated Doppelganger as Category Two on the Breakout Scale.

Israel: Zero Zeno and STOIC

OpenAI banned several accounts originating in Israel that were used to create and edit content for an IO on Facebook, Instagram, X, YouTube, and various websites. The operation was run by STOIC, an Israeli political research and management company. The investigators named the operation Zero Zeno “in honor of the founder of the stoic school of philosophy, and to reflect the low levels of engagement that the network attracted.” The operation created English- and Hebrew-language content for audiences in Canada, Israel, and the United States. STOIC had also begun developing English-language content targeting audiences in India and was in the early stages of a campaign targeting audiences in Ghana. The company used OpenAI’s models to generate the social media comments and web articles it posted across these platforms.

STOIC also used the AI models to develop fake personas for social media accounts based on variables such as age, gender, and location. However, the accounts’ profile pictures were likely created using an earlier type of AI, generative adversarial networks (GANs). The firm also used the models to research people in Israel who had commented on an Israeli trade union board. STOIC used the personas to create accounts on Facebook, Instagram, and X to post disinformation. It reused the same picture across several profiles, and multiple accounts with the same profile picture sometimes commented on the same post. The investigators found these fake accounts replying to the operation’s own posts, possibly to create the impression of audience engagement. The operators also posted similar content on four websites that “posed as activist groups focused on the Gaza conflict and broader issues of relations between individuals of Jewish and Muslim faith.”

STOIC divided the operation into several thematic subsets, ranging from the Gaza conflict and Jewish-Muslim relations to the Indian elections. The investigators noted that OpenAI disrupted “some activity” focused on the elections less than 24 hours after that campaign began. In other subsets, STOIC created content criticizing the UN Relief and Works Agency for Palestine Refugees in the Near East (UNRWA), as well as content focused on Canada that criticized the “radical Islamists” living in the country. Another subset focused on US universities and “accused pro-Palestinian protesters of promoting antisemitism and terrorism.”

The operators routinely used the same profiles to comment on different countries and topics. For example, accounts that posted about Canada would switch to posting about the United States, India, or Israeli trade unions. The accounts also replied to posts on Western political figures’ profiles with comments unrelated to the original post. The report gave the example of one fake account that posted about “Qatar ‘buying up’ America” in a reply to a post about former US President Trump’s musical preferences. The operation attracted little engagement “other than from its own inauthentic accounts.” The investigators rated Zero Zeno as Category Two on the Breakout Scale.

Analysis

The five cases illustrate how various countries are increasingly incorporating publicly available generative AI tools such as ChatGPT into their influence operations. They also demonstrate how countries, political parties, and the companies that work for them will use AI to pursue various geopolitical objectives. The Spamouflage operation by China’s MPS shows how the country will leverage generative AI for various aspects of disinformation and influence operations. For example, the group used the models to research Chinese dissidents and create content for its operations, allowing it to gather information on its targets more efficiently. This efficiency enabled the operators to develop disinformation faster and in a more focused manner. The investigation found, for instance, that the network targeted specific dissidents who spoke out against China after gathering information about what they had said. This suggests that the MPS is actively conducting IO against dissidents to prevent their content from gaining traction and reaching a wide audience. The operations also allow China to spread disinformation in pursuit of its geopolitical objectives, such as decreasing the influence of other countries. However, the relatively small share of AI-generated content in the network’s output also indicates that China is still determining how to incorporate such content into IOs and other disinformation campaigns.

The IUVM operation shows how Iran is beginning to use generative AI in disinformation and influence operations. In 2020, the United States sanctioned this Islamic Revolutionary Guard Corps-Quds Force (IRGC-QF)-connected group for spreading disinformation related to COVID-19 and the 2020 US presidential election. Its latest iteration aimed to spread disinformation against Israel and the United States while praising Iran. However, repeated takedowns by the FBI and other entities have reduced its capacity to reach audiences. There are also indications that the operators are still learning how to incorporate AI into their IOs. The most notable example is the OpenAI response messages left in the article tags. This lack of proofreading may indicate that the operators assumed the model’s output would not contain such responses, or that they did not proofread anything beyond the articles themselves.

The Russian operations, Bad Grammar and Doppelganger, point to Russia’s continued reliance on high-volume IOs to spread disinformation. The campaigns also indicate that Russia is adapting its existing TTPs for AI-based IOs. For example, the operators targeted audiences in Europe, Ukraine, and the United States with AI-generated content, matching the targets of previous Russian campaigns. Such campaigns aim to divide people along political lines within the targeted countries. Russia’s emphasis on content about the Ukrainian conflict reflects this aim, since Russia wants the United States and other countries to reduce or end their assistance to Ukraine. However, both campaigns also illustrate the limits of Russia’s use of AI in its operations: neither expanded to new audiences, and both generated little engagement beyond their own networks.

The report’s most significant case involves the campaigns that the Israeli company STOIC conducted against domestic and foreign targets. Its campaign to influence the Indian elections by criticizing the Bharatiya Janata Party (BJP) was probably conducted on behalf of someone connected to the Indian National Congress party. OpenAI found and banned the accounts used in the operation while it was in the early stages of deployment, limiting its potential impact on the elections. STOIC’s campaigns related to the ongoing conflict in Gaza illustrate how companies can help governments achieve their geopolitical goals. The company created content criticizing UNRWA for its alleged role in the October 7th attack and for allegedly assisting Hamas. STOIC’s goal was to turn public opinion against the agency by implicating it in the attack and portraying it as having a history of aiding Hamas. Another example is the campaign against American universities that allowed pro-Palestinian protests on their campuses, which was probably intended to turn public opinion against the universities by publicly shaming them.

STOIC’s involvement in these campaigns also illustrates the ethical gray area that companies occupy when conducting such operations. Although STOIC advertises AI content generation and political campaign management among its services, these campaigns go beyond the scope of what a company would typically do. The company’s website says it provides political management, research, and strategy for clients using AI-based solutions, and its STOIC AI feature automatically produces content that targets specific audiences with new messages. The software would likely also allow the company or its users to further tailor the content to specific audiences, creating an opening for generating mis- and disinformation.